Our Services

What We Build with MySQL

From MVPs to enterprise systems, we deliver production-ready solutions that scale.

ACID Compliance & Data Integrity for Mission-Critical Applications

We protect data integrity using the ACID properties of SQL databases (Atomicity, Consistency, Isolation, Durability), which are essential for financial transactions, healthcare records, and inventory management. MySQL's InnoDB engine and PostgreSQL's transaction management prevent data corruption, handle concurrent operations safely, and maintain consistency during failures. This matters most for applications that need financial transaction accuracy, inventory tracking without overselling, audit trails for compliance, and concurrent user operations without conflicts. We choose appropriate transaction isolation levels, apply deadlock prevention strategies, and build rollback mechanisms so that data remains consistent even during system failures or high concurrent load.
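As a minimal sketch of the rollback behavior described above, the following uses SQLite from Python's standard library as a stand-in for MySQL or PostgreSQL. The `accounts` table and the `transfer` function are hypothetical names chosen for illustration. The credit is applied first and the debit second, so a failed debit shows that the earlier credit is undone as well.

```python
import sqlite3

def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> bool:
    """Move `amount` from src to dst atomically; return False if rolled back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            # The CHECK constraint rejects a negative balance, raising IntegrityError.
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "id INTEGER PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()

ok = transfer(conn, 1, 2, 30)       # succeeds
failed = transfer(conn, 1, 2, 500)  # debit would overdraw, so the credit is rolled back too
balances = dict(conn.execute("SELECT id, balance FROM accounts"))
```

On a production MySQL or PostgreSQL system the same pattern applies, with the isolation level and retry-on-deadlock policy chosen per workload.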

Advanced Indexing & Query Optimization for 80% Faster Performance

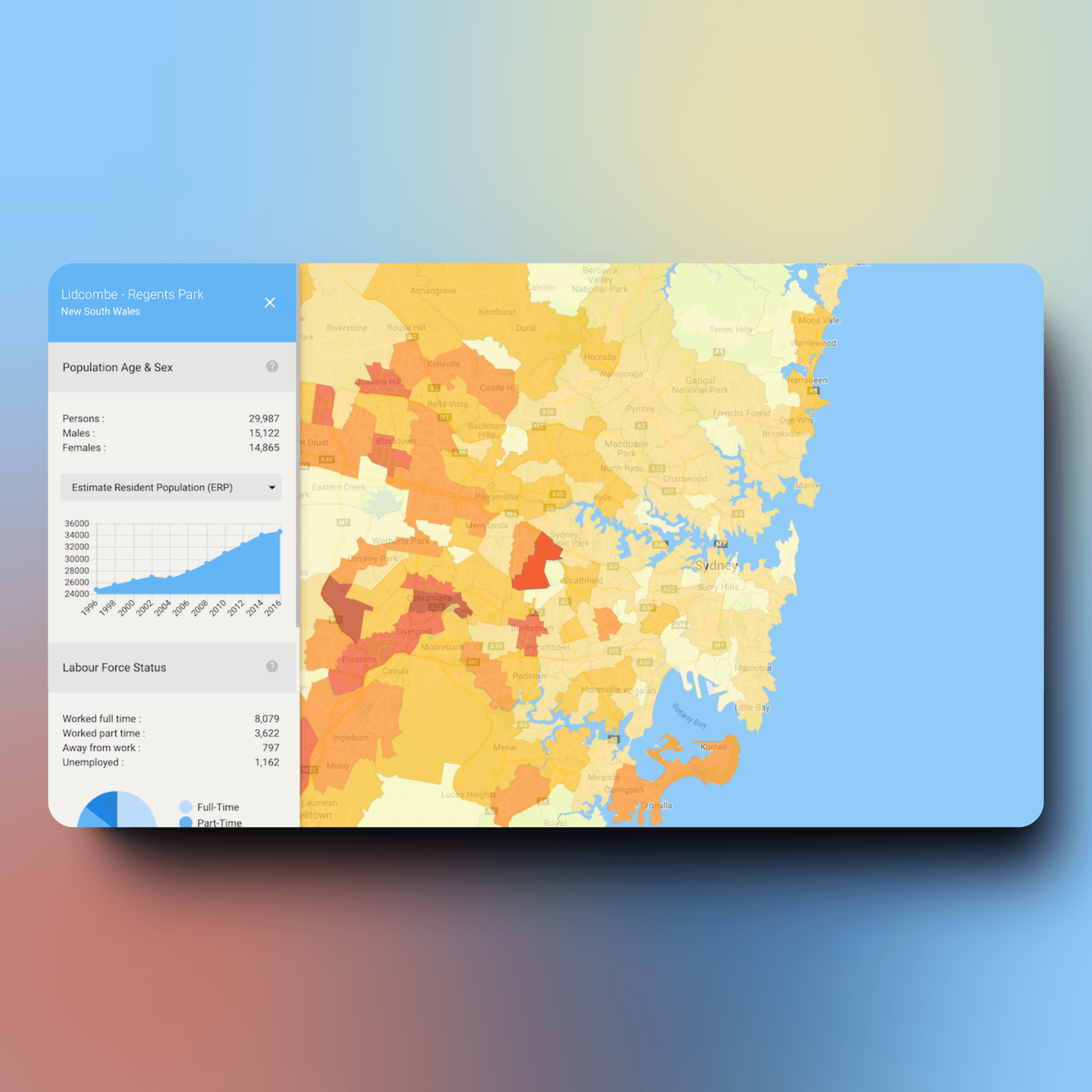

We reduce query response times by up to 80% through strategic indexing, query optimization, and execution plan analysis. MySQL supports B-tree indexes, full-text search indexes, and spatial indexes for geographic data. PostgreSQL adds partial indexes, expression indexes, and GiST/GIN indexes for advanced data types. We analyze slow queries using EXPLAIN plans, create composite indexes for multi-column searches, implement covering indexes that avoid table lookups, and add caching at the application or proxy layer (MySQL's built-in query cache was removed in version 8.0). For analytics workloads, we design column-oriented storage patterns and materialized views. This is essential for applications with large datasets (millions of records or more), complex JOIN operations, full-text search requirements, and sub-100ms response time needs.
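To illustrate how a composite, covering index changes an execution plan, here is a small sketch using SQLite's `EXPLAIN QUERY PLAN` from Python. SQLite stands in for MySQL or PostgreSQL, whose `EXPLAIN` output looks different. The `orders` table, the index name, and the `plan` helper are assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT, total INTEGER)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, status, total) VALUES (?, ?, ?)",
    [(i % 100, "paid" if i % 3 else "open", i) for i in range(1000)],
)

QUERY = "SELECT total FROM orders WHERE customer_id = ? AND status = ?"

def plan(conn: sqlite3.Connection, sql: str, params: tuple) -> str:
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)

before = plan(conn, QUERY, (7, "paid"))  # full table scan without an index

# Composite index on the filter columns; including `total` makes it covering,
# so the query can be answered from the index alone.
conn.execute(
    "CREATE INDEX idx_orders_cust_status_total "
    "ON orders (customer_id, status, total)"
)
after = plan(conn, QUERY, (7, "paid"))

print(before)
print(after)
```

The first plan reports a scan of the whole table, while the second reports a search using the covering index. The exact wording varies between SQLite versions.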

High Availability with Replication & Automatic Failover

We target 99.99% uptime using primary-replica (master-slave) replication, multi-master clustering, and automatic failover strategies. MySQL supports asynchronous and semi-synchronous replication with read replicas for load distribution. PostgreSQL provides streaming replication with hot standby servers. We configure automatic failover with orchestration systems such as Patroni and Stolon for PostgreSQL, use ProxySQL to route MySQL traffic away from failed nodes, and use PgBouncer for PostgreSQL connection pooling, keeping downtime during server failures to a minimum. Read replicas distribute query load and can reduce the primary server's burden by up to 70%. This is essential for applications requiring 24/7 availability, geographic distribution, disaster recovery, and the ability to handle traffic spikes without degradation. We also set up monitoring and alerting so that operations teams are notified of replication lag or failures.
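The read/write split and lag-aware routing described above can be sketched in a few lines. This is a simplified illustration of the routing decision only, not how ProxySQL or Patroni are implemented. The `Node` class, the `pick_node` function, and the 5-second lag threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Node:
    name: str
    is_primary: bool
    healthy: bool = True
    lag_seconds: float = 0.0  # replication lag as reported by monitoring

def pick_node(nodes: List[Node], is_write: bool, max_lag_seconds: float = 5.0) -> Node:
    """Send writes to the primary and reads to the freshest healthy replica."""
    primary = next((n for n in nodes if n.is_primary and n.healthy), None)
    if primary is None:
        raise RuntimeError("no healthy primary; failover must promote a replica first")
    if is_write:
        return primary
    fresh = [
        n for n in nodes
        if not n.is_primary and n.healthy and n.lag_seconds <= max_lag_seconds
    ]
    # Fall back to the primary when every replica is down or too far behind.
    return min(fresh, key=lambda n: n.lag_seconds) if fresh else primary

nodes = [
    Node("primary", is_primary=True),
    Node("replica-a", is_primary=False, lag_seconds=0.4),
    Node("replica-b", is_primary=False, lag_seconds=12.0),
]
```

With these nodes, writes go to `primary` and reads go to `replica-a`, while `replica-b` is skipped because its lag exceeds the threshold.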

Scalability from Startup to Enterprise: Vertical & Horizontal Strategies

SQL databases scale vertically (more powerful servers) and horizontally (database sharding, read replicas). We start with single-server deployments for startups, add read replicas as traffic grows, implement connection pooling with PgBouncer (PostgreSQL) or ProxySQL (MySQL) for efficient resource usage, and design sharding strategies for datasets exceeding single-server capacity. PostgreSQL can handle datasets of 100TB and more with proper partitioning. MySQL powers platforms like Facebook and Twitter at massive scale. For multi-tenant SaaS products, we implement database-per-tenant isolation. We design auto-scaling strategies with cloud providers (AWS RDS, Azure Database, Google Cloud SQL), enabling growth from 1,000 to 10 million users without database rewrites.
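As one simple way to picture a sharding strategy, the sketch below maps a key to a shard with a stable hash. The function name `shard_for` and the choice of four shards are assumptions for illustration. Real deployments often prefer consistent hashing or a lookup directory, because plain modulo hashing moves most keys when the shard count changes.

```python
import hashlib

def shard_for(key: str, shard_count: int) -> int:
    """Map a key (for example a tenant or customer ID) to a shard number."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    # A cryptographic hash gives a stable, evenly spread value across processes,
    # unlike Python's built-in hash(), which is randomized per run for strings.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count

assignments = {f"customer-{i}": shard_for(f"customer-{i}", 4) for i in range(1000)}
```

The same key always lands on the same shard, so the application can find a customer's data without consulting a central index.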

Comprehensive Security: Encryption, Access Control & Audit Logging

We implement multi-layered database security with encryption at rest (AES-256), encryption in transit (TLS), role-based access control (RBAC), row-level security in PostgreSQL, and comprehensive audit logging. MySQL and PostgreSQL support user permission management at the database, table, and column levels. We implement parameterized queries to prevent SQL injection, least-privilege access principles, password policies with rotation, IP allowlisting for network security, and audit logging that tracks all data modifications. This is essential for applications that handle sensitive customer data, financial information, or protected health information (HIPAA), and for those that require SOC 2 or ISO 27001 compliance. We also configure automated security updates and vulnerability scanning.
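To show why parameterized queries matter, here is a small sketch comparing string interpolation with a bound parameter. It uses SQLite from Python's standard library as a stand-in, and the `users` table and the sample input are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, is_admin INTEGER)")
conn.executemany(
    "INSERT INTO users (email, is_admin) VALUES (?, ?)",
    [("a@example.com", 0), ("root@example.com", 1)],
)

malicious = "nobody@example.com' OR '1'='1"

# Unsafe: the input is pasted into the SQL text and rewrites the WHERE clause.
unsafe_rows = conn.execute(
    f"SELECT email FROM users WHERE email = '{malicious}'"
).fetchall()

# Safe: the driver passes the input as a value, never as SQL syntax.
safe_rows = conn.execute(
    "SELECT email FROM users WHERE email = ?", (malicious,)
).fetchall()
```

The interpolated query returns every row in the table, while the parameterized query returns none, because no user has that literal string as an email address.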

Automated Backups & Point-in-Time Recovery for Data Protection

We implement comprehensive backup strategies with automated daily full backups, hourly incremental backups, point-in-time recovery (PITR) that enables restoration to any second, and geographic backup distribution for disaster recovery. MySQL supports logical backups (mysqldump) and physical backups (Percona XtraBackup). PostgreSQL provides continuous archiving with Write-Ahead Logging (WAL). We test recovery procedures monthly to confirm that backups are restorable. Retention policies maintain 30 days of daily backups and 12 months of monthly backups. This is essential for compliance requirements and for protecting against hardware failures, human errors (accidental deletions), ransomware attacks, and data corruption. For critical systems, we target a recovery time objective (RTO) of under 15 minutes and a recovery point objective (RPO) of under 5 minutes.
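The retention policy above (30 days of daily backups plus 12 months of monthly backups) can be expressed as a small pruning rule. This is a sketch under assumptions: the function name `backups_to_keep` is hypothetical, and treating the first backup taken in each month as the "monthly" backup is one possible convention, not something stated above.

```python
from datetime import date, timedelta
from typing import Iterable, Set

def backups_to_keep(backup_dates: Iterable[date], today: date,
                    daily_days: int = 30, monthly_months: int = 12) -> Set[date]:
    """Return the backup dates that the retention policy says to keep."""
    dates = sorted(set(backup_dates))
    daily_cutoff = today - timedelta(days=daily_days)
    current_month = today.year * 12 + today.month

    # Keep every backup taken within the last `daily_days` days.
    keep = {d for d in dates if daily_cutoff < d <= today}

    # Keep the first backup of each of the last `monthly_months` calendar months.
    first_in_month = {}
    for d in dates:
        first_in_month.setdefault((d.year, d.month), d)
    for (year, month), d in first_in_month.items():
        age_in_months = current_month - (year * 12 + month)
        if 0 <= age_in_months < monthly_months:
            keep.add(d)
    return keep

today = date(2024, 6, 15)
start = date(2023, 1, 1)
history = [start + timedelta(days=i) for i in range((today - start).days + 1)]
kept = backups_to_keep(history, today)
```

Anything in `history` that is not in `kept` is a candidate for deletion. A real job would also verify that a newer restorable backup exists before removing older ones.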

Database Choices: MySQL vs PostgreSQL vs SQLite

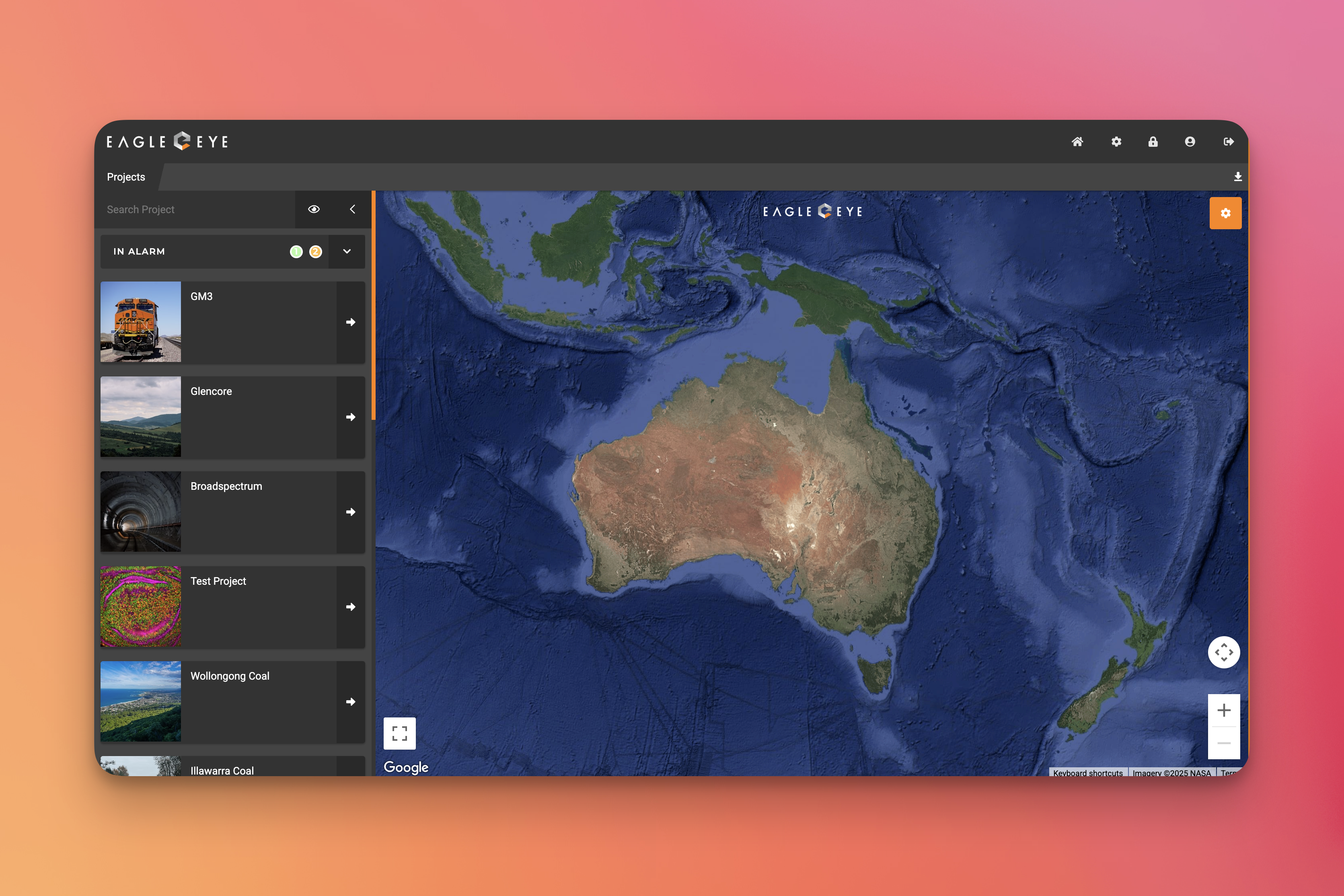

We help you choose the right SQL database for your needs. MySQL excels at high-read web applications, e-commerce platforms, content management systems, simple transactional systems, and applications requiring horizontal scaling with read replicas. PostgreSQL is ideal for complex queries with JOINs and subqueries, financial applications requiring strict ACID compliance, GIS applications with PostGIS, JSON data storage with indexing, and advanced data types (arrays, hstore, JSONB). SQLite works best for mobile applications, embedded systems, local desktop applications, development and testing environments, and edge computing with limited resources. We often combine data stores, for example using MySQL for primary application data, PostgreSQL for analytics, Redis for caching, and MongoDB for flexible schemas.

Zero-Downtime Migrations & Database Version Upgrades

We execute database migrations with zero downtime using blue-green deployment strategies, online schema change tools (pt-online-schema-change for MySQL, pg_repack for online table rebuilds in PostgreSQL), and backward-compatible migration patterns. For version upgrades, we perform comprehensive testing in staging environments, use logical replication for PostgreSQL major version upgrades, and implement rollback strategies. Application-level migration tools such as Flyway, Liquibase, TypeORM Migrations, and Alembic keep the database schema synchronized with application code. We handle adding and removing columns without locking tables, migrating from MySQL to PostgreSQL (or vice versa), consolidating multiple databases, and refactoring schemas for performance. All migrations include data validation to preserve integrity throughout the process.
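A backward-compatible migration is often done in an "expand, backfill, contract" sequence. The sketch below shows the first two steps using SQLite from Python as a stand-in. The `customers` table, the `display_name` column, and the tiny batch size are hypothetical, and production tools such as those named above handle locking and replication concerns that this example ignores.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, full_name TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO customers (full_name) VALUES (?)",
    [(f"User {i}",) for i in range(10)],
)
conn.commit()

# Step 1 (expand): add the new column as nullable so existing code keeps working.
conn.execute("ALTER TABLE customers ADD COLUMN display_name TEXT")

def backfill(conn: sqlite3.Connection, batch_size: int = 4) -> int:
    """Step 2: copy data into the new column in small batches; return batch count."""
    batches = 0
    while True:
        with conn:  # one short transaction per batch keeps locks brief
            cur = conn.execute(
                "UPDATE customers SET display_name = full_name "
                "WHERE id IN (SELECT id FROM customers "
                "WHERE display_name IS NULL LIMIT ?)",
                (batch_size,),
            )
        if cur.rowcount == 0:
            return batches
        batches += 1

batches = backfill(conn)
remaining = conn.execute(
    "SELECT COUNT(*) FROM customers WHERE display_name IS NULL"
).fetchone()[0]
```

Once `remaining` is zero and the application reads only the new column, the final "contract" step can drop the old column in a later release.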